# Drowsiness Detection


import os

import cv2                       ## pip install opencv-python (or opencv-contrib-python for the full package)
import matplotlib.pyplot as plt  ## pip install matplotlib
import numpy as np               ## pip install numpy
import tensorflow as tf          ## pip install tensorflow (the separate tensorflow-gpu package is deprecated)

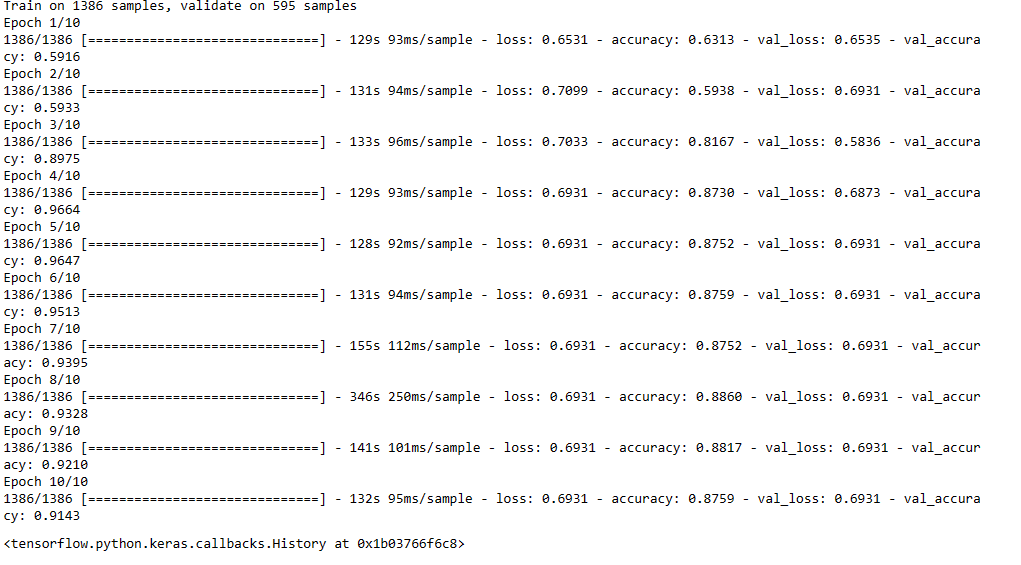

img_array = cv2.imread("Test_Dataset/Closed_Eyes/s0001_00056_0_0_0_0_0_01.png", cv2.IMREAD_GRAYSCALE)
plt.imshow(img_array, cmap="gray")
img_array.shape  ## a grayscale image loads as a 2-D array (height, width)

Datadirectory = "Test_Dataset/"         ## training dataset
Classes = ["Closed_Eyes", "Open_Eyes"]  ## list of classes (label 0 and label 1)

for category in Classes:
    path = os.path.join(Datadirectory, category)  ## e.g. Test_Dataset/Closed_Eyes
    for img in os.listdir(path):
        img_array = cv2.imread(os.path.join(path, img), cv2.IMREAD_GRAYSCALE)
        backtorgb = cv2.cvtColor(img_array, cv2.COLOR_GRAY2RGB)  ## copy the gray channel into 3 channels
        plt.imshow(img_array, cmap="gray")
        plt.show()
        break  ## show only the first image...
    break      ## ...of the first class

img_size = 224  ## MobileNet's default input size (224 x 224)

new_array = cv2.resize(backtorgb, (img_size, img_size))
plt.imshow(new_array, cmap="gray")
plt.show()
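`cv2.COLOR_GRAY2RGB` adds no colour information. It copies the single gray value into all three channels, and that is what lets a grayscale eye image fit MobileNet's three-channel input. Below is a NumPy-only sketch of the same operation on a made-up 2x2 image. OpenCV is not needed to run it.

```python
import numpy as np

# Made-up 2x2 grayscale "image" (uint8 values 0-255).
gray = np.array([[0, 128],
                 [200, 255]], dtype=np.uint8)

# Repeat the single channel three times along a new last axis: H x W -> H x W x 3.
rgb = np.repeat(gray[:, :, np.newaxis], 3, axis=-1)

print(rgb.shape)                                             # (2, 2, 3)
print(all((rgb[:, :, c] == gray).all() for c in range(3)))  # True
```

Every channel of the result equals the original gray image, so the three "colour" channels carry identical information.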

Reading all the images and converting them into arrays of data and labels

training_Data = []

def create_training_Data():
    for category in Classes:
        path = os.path.join(Datadirectory, category)
        class_num = Classes.index(category)  ## 0 = Closed_Eyes, 1 = Open_Eyes
        for img in os.listdir(path):
            try:
                img_array = cv2.imread(os.path.join(path, img), cv2.IMREAD_GRAYSCALE)
                backtorgb = cv2.cvtColor(img_array, cv2.COLOR_GRAY2RGB)
                new_array = cv2.resize(backtorgb, (img_size, img_size))
                training_Data.append([new_array, class_num])
            except Exception:
                pass  ## skip files that cannot be read as images (imread returns None)

create_training_Data()
print(len(training_Data))

1981
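The total of 1981 says nothing about how the images split between the two classes. Also, `create_training_Data` silently skips any file that fails inside the `try` block. A per-class count is a cheap sanity check. The sketch below runs on a made-up five-item list; with the real data you would count over `training_Data` itself.

```python
from collections import Counter

Classes = ["Closed_Eyes", "Open_Eyes"]

# Made-up stand-in for training_Data: [image, label] pairs, label = Classes.index(category).
training_Data = [[None, 0], [None, 0], [None, 1], [None, 0], [None, 1]]

counts = Counter(label for _, label in training_Data)
for class_num, name in enumerate(Classes):
    print(name, counts[class_num])
# Closed_Eyes 3
# Open_Eyes 2
```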

import random

random.shuffle(training_Data)  ## the list is ordered by class, so shuffle it

X = []
y = []
for features, label in training_Data:
    X.append(features)
    y.append(label)

X = np.array(X).reshape(-1, img_size, img_size, 3)
X.shape

(1981, 224, 224, 3)

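# normalize the data
X = X.astype("float32") / 255.0  ## scale pixels to [0, 1]; float32 needs half the memory of float64
Y = np.array(y)                  ## integer labels as a NumPy array for Keras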
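Dividing a uint8 array by the Python float `255.0` produces a float64 array, which uses eight bytes per value. The arithmetic below uses the shape reported above to show how much memory each representation of `X` needs. It explains why casting to float32 before dividing can matter on a machine with limited RAM.

```python
import numpy as np

n, h, w, c = 1981, 224, 224, 3   # X.shape reported above
pixels = n * h * w * c

# Bytes needed to hold X in each dtype.
sizes = {np.dtype(d).name: pixels * np.dtype(d).itemsize
         for d in (np.uint8, np.float32, np.float64)}

for name, nbytes in sizes.items():
    print(f"{name}: {nbytes / 1e9:.2f} GB")
# uint8: 0.30 GB
# float32: 1.19 GB
# float64: 2.39 GB
```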

import pickle

## save the arrays so the preprocessing does not have to be repeated
with open("X.pickle", "wb") as pickle_out:
    pickle.dump(X, pickle_out)
with open("y.pickle", "wb") as pickle_out:
    pickle.dump(Y, pickle_out)

## load them back (the with-blocks close the files)
with open("X.pickle", "rb") as pickle_in:
    X = pickle.load(pickle_in)
with open("y.pickle", "rb") as pickle_in:
    Y = pickle.load(pickle_in)
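Pickle works for these arrays, but NumPy's own `.npz` format is an alternative for plain numeric data. `np.savez_compressed` stores several named arrays in one file, and `np.load` reads them back without unpickling arbitrary Python objects. Below is a sketch with small made-up arrays; the file name `dataset.npz` is hypothetical.

```python
import os
import tempfile

import numpy as np

# Small made-up stand-ins for the real X (images) and Y (labels).
X = np.random.rand(4, 8, 8, 3).astype("float32")
Y = np.array([0, 1, 1, 0])

path = os.path.join(tempfile.mkdtemp(), "dataset.npz")
np.savez_compressed(path, X=X, Y=Y)  # both arrays in one compressed file

with np.load(path) as data:          # the NpzFile is closed when the block ends
    X_loaded = data["X"]
    Y_loaded = data["Y"]

print(X_loaded.shape, X_loaded.dtype, Y_loaded.tolist())  # (4, 8, 8, 3) float32 [0, 1, 1, 0]
```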

Deep learning model for training: transfer learning

import tensorflow as tf

from tensorflow import keras

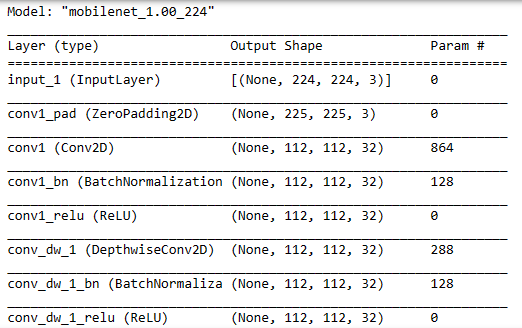

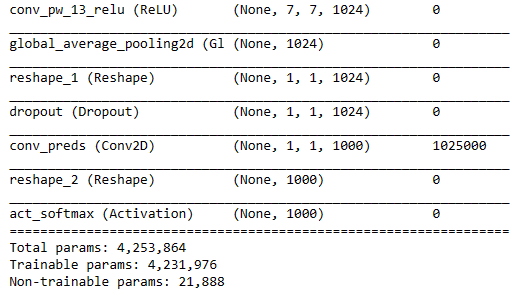

from tensorflow.keras import layers

model = tf.keras.applications.mobilenet.MobileNet()  ## MobileNet with ImageNet weights (downloaded on first use)
model.summary()

Transfer Learning

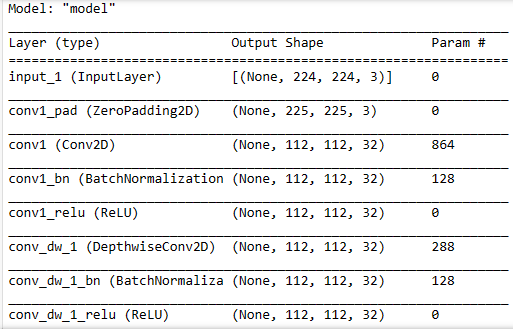

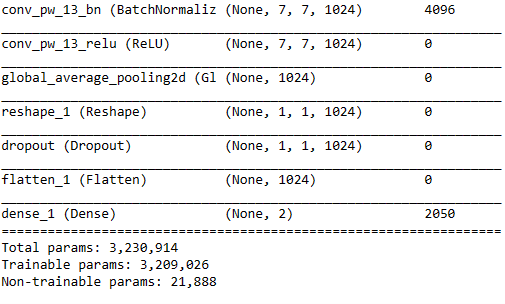

base_input = model.layers[0].input     ## input
base_output = model.layers[-4].output  ## output of the layer before MobileNet's 1000-class ImageNet classifier

Previously (before correction):

Flat_layer = layers.Flatten()(base_output)
final_output = layers.Dense(1)(Flat_layer)                 ## one node (1 / 0)
final_output = layers.Activation('sigmoid')(final_output)

After correction:

Flat_layer = layers.Flatten()(base_output)
final_output = layers.Dense(2)(Flat_layer)                 ## number of classes = number of FC nodes for the classification layer
final_output = layers.Activation('softmax')(final_output)  ## assign back to final_output so keras.Model below receives the softmax output

new_model = keras.Model(inputs=base_input, outputs=final_output)
new_model.summary()
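Flatten and Activation have no weights, so in `new_model.summary()` the layers added on top of MobileNet contribute only the Dense layer's parameters. All remaining parameters belong to MobileNet itself, which stays trainable here because no layer is frozen. Below is a small worked count. It assumes the feature vector reaching Flatten has 1024 entries (MobileNet v1 with its default width).

```python
# Assumption: the flattened MobileNet features have 1024 entries
# (MobileNet v1 with the default width multiplier, alpha=1.0).
FEATURES = 1024

def dense_params(inputs: int, units: int) -> int:
    """Trainable parameters of a Dense layer: an inputs x units weight matrix plus one bias per unit."""
    return inputs * units + units

print(dense_params(FEATURES, 1))  # 1025 for the Dense(1) + sigmoid head
print(dense_params(FEATURES, 2))  # 2050 for the Dense(2) + softmax head
```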

Settings for binary classification (open / closed), before correction:

new_model.compile(loss="binary_crossentropy", optimizer="adam", metrics=["accuracy"])

After correction:

new_model.compile(loss="sparse_categorical_crossentropy", optimizer="adam", metrics=["accuracy"])  ## integer labels 0 / 1, two softmax outputs
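`sparse_categorical_crossentropy` fits this setup because the labels in `Y` are plain integers (0 or 1, from `Classes.index`) while the model outputs one value per class. With Keras' default `from_logits=False`, the loss assumes that output is already a probability distribution, so the softmax has to be part of the model. Below is a NumPy sketch of the quantity the loss computes, on made-up numbers. The clipping constant is an assumption added for numerical safety, not a copy of Keras internals.

```python
import numpy as np

def sparse_categorical_crossentropy(labels, probs, eps=1e-7):
    """Mean of -log(probability the model gave to the true class).

    labels: integer class ids, shape (N,); probs: per-class probabilities, shape (N, C).
    """
    probs = np.clip(probs, eps, 1.0 - eps)          # avoid log(0)
    picked = probs[np.arange(len(labels)), labels]  # probability of each true class
    return float(np.mean(-np.log(picked)))

# Made-up softmax outputs for [Closed_Eyes, Open_Eyes] and their integer labels.
probs = np.array([[0.9, 0.1],
                  [0.2, 0.8]])
labels = np.array([0, 1])  # integers like Y; no one-hot encoding needed

print(round(sparse_categorical_crossentropy(labels, probs), 4))  # 0.1643
```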

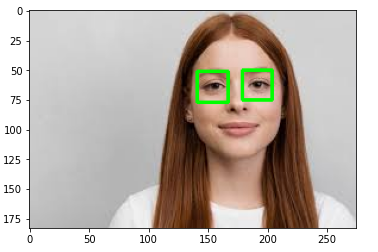

new_model.fit(X, Y, epochs=10, validation_split=0.3)  ## training; the last 30% of the samples are held out for validation
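Keras documents that `validation_split` takes the validation data from the *last* samples of `x` and `y`, before any shuffling. So the earlier `random.shuffle(training_Data)` matters: the list is built one class at a time. Below is a sketch of the split arithmetic. It assumes the training share is rounded down, and `keras_style_split` is a hypothetical helper, not a Keras function.

```python
import math

def keras_style_split(num_samples: int, validation_split: float):
    """Sketch of how fit(validation_split=...) divides the data: the LAST fraction
    of the samples, in their current order, is held out for validation."""
    split_at = math.floor(num_samples * (1.0 - validation_split))
    return split_at, num_samples - split_at

print(keras_style_split(1981, 0.3))  # (1386, 595)

# training_Data is built class by class (all Closed_Eyes, then all Open_Eyes).
# Made-up 10-sample example of that order without shuffling:
labels_in_build_order = [0] * 4 + [1] * 6
_, n_val = keras_style_split(len(labels_in_build_order), 0.3)
print(sorted(set(labels_in_build_order[-n_val:])))  # [1]: the validation tail holds one class only
```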

new_model.save('my_newmodel_drowsiness.h5')
new_model = tf.keras.models.load_model('my_newmodel_drowsiness.h5')

Checking the network for predictions

img_array = cv2.imread('s0001_00155_0_0_0_0_0_01.png', cv2.IMREAD_GRAYSCALE)
backtorgb = cv2.cvtColor(img_array, cv2.COLOR_GRAY2RGB)
new_array = cv2.resize(backtorgb, (img_size, img_size))

## a second test image (this replaces the first one)
img_array = cv2.imread('s0012_08255_0_0_1_1_0_02.png', cv2.IMREAD_GRAYSCALE)
backtorgb = cv2.cvtColor(img_array, cv2.COLOR_GRAY2RGB)
new_array = cv2.resize(backtorgb, (img_size, img_size))

X_input = np.array(new_array).reshape(1, img_size, img_size, 3)  ## add the batch dimension
X_input.shape

(1, 224, 224, 3)

plt.imshow(new_array)

X_input = X_input / 255.0  ## same scaling as the training data
prediction = new_model.predict(X_input)
np.argmax(prediction)  ## 0 = Closed_Eyes, 1 = Open_Eyes

1

Let's check on unknown images

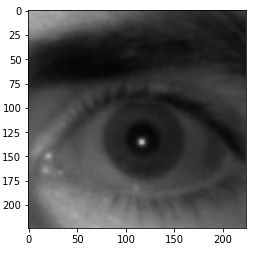

img = cv2.imread('person_image.jpg')
plt.imshow(cv2.cvtColor(img, cv2.COLOR_BGR2RGB))  ## OpenCV loads BGR; matplotlib expects RGB

faceCascade = cv2.CascadeClassifier(cv2.data.haarcascades + 'haarcascade_frontalface_default.xml')
eye_cascade = cv2.CascadeClassifier(cv2.data.haarcascades + 'haarcascade_eye.xml')

gray = cv2.cvtColor(img, cv2.COLOR_BGR2GRAY)
eyes = eye_cascade.detectMultiScale(gray, 1.1, 4)  ## scaleFactor=1.1, minNeighbors=4
for (x, y, w, h) in eyes:
    cv2.rectangle(img, (x, y), (x+w, y+h), (0, 255, 0), 2)  ## green box around each detected eye

plt.imshow(cv2.cvtColor(img, cv2.COLOR_BGR2RGB))

Cropping the eye image

eye_cascade = cv2.CascadeClassifier(cv2.data.haarcascades + 'haarcascade_eye.xml')
gray = cv2.cvtColor(img, cv2.COLOR_BGR2GRAY)
#print(faceCascade.empty())
eyes = eye_cascade.detectMultiScale(gray, 1.1, 4)
for x, y, w, h in eyes:
    roi_gray = gray[y:y+h, x:x+w]
    roi_color = img[y:y+h, x:x+w]
    eyess = eye_cascade.detectMultiScale(roi_gray)  ## look for an eye again inside each detected region
    if len(eyess) == 0:
        print("eyes are not detected")
    else:
        for (ex, ey, ew, eh) in eyess:
            eyes_roi = roi_color[ey:ey+eh, ex:ex+ew]  ## crop of the eye (the last detection wins)

eyes are not detected
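The cropping loop keeps only the last nested detection in `eyes_roi`. If no nested detection succeeds, it never assigns `eyes_roi` at all, and the cell prints "eyes are not detected", as above. One deterministic alternative is to keep the largest box. Below is a sketch using a hypothetical helper `largest_box` (not an OpenCV function), tested on made-up boxes.

```python
def largest_box(boxes):
    """Return the (x, y, w, h) box with the largest area, or None if there is none.

    `boxes` has the shape of a detectMultiScale result: a sequence of (x, y, w, h)
    rows, or an empty tuple when nothing was detected.
    """
    boxes = list(boxes)
    if not boxes:
        return None
    return max(boxes, key=lambda box: box[2] * box[3])

print(largest_box([(10, 12, 21, 21), (40, 15, 30, 28)]))  # (40, 15, 30, 28)
print(largest_box(()))                                    # None
```

With real detections, call `largest_box(eye_cascade.detectMultiScale(roi_gray))`, check that the result is not `None`, and slice `roi_color` with it. This yields at most one crop per region.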

plt.imshow(cv2.cvtColor(eyes_roi, cv2.COLOR_BGR2RGB))
eyes_roi.shape

(21, 21, 3)

final_image = cv2.resize(eyes_roi, (224, 224))
final_image = np.expand_dims(final_image, axis=0)  ## need fourth dimension (a batch of one image)
final_image = final_image / 255.0
final_image.shape

(1, 224, 224, 3)

prediction = new_model.predict(final_image)
prediction

array([[-25.662868 , -6.1713142]], dtype=float32)

np.argmax(prediction)  ## 0 = Closed_Eyes, 1 = Open_Eyes

1
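The two numbers printed by `new_model.predict` are not probabilities: both are negative and they do not sum to 1. They are raw scores that did not pass through a softmax. Softmax preserves the order of the scores, so `np.argmax` picks the same class either way. The sketch below applies softmax to the recorded values and maps the index back to a class name. Index 1 is `Open_Eyes` because of the order in `Classes`.

```python
import numpy as np

def softmax(scores):
    """Turn raw scores (logits) into probabilities that sum to 1 along the last axis."""
    shifted = scores - np.max(scores, axis=-1, keepdims=True)  # subtract the max for numerical stability
    exp = np.exp(shifted)
    return exp / exp.sum(axis=-1, keepdims=True)

Classes = ["Closed_Eyes", "Open_Eyes"]
raw = np.array([[-25.662868, -6.1713142]])  # the scores printed above

probs = softmax(raw)
print(np.allclose(probs.sum(axis=-1), 1.0))  # True
print(Classes[int(np.argmax(probs))])        # Open_Eyes (same index as np.argmax(raw))
```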

# Realtime Video Demo

First, detect whether the eyes are closed or open

import cv2  ### pip install opencv-python
import numpy as np
## pip install opencv-contrib-python fullpackage

faceCascade = cv2.CascadeClassifier(cv2.data.haarcascades + 'haarcascade_frontalface_default.xml')
eye_cascade = cv2.CascadeClassifier(cv2.data.haarcascades + 'haarcascade_eye.xml')  ## load once, not every frame

cap = cv2.VideoCapture(1)
# Check if the webcam is opened correctly; fall back to the default camera
if not cap.isOpened():
    cap = cv2.VideoCapture(0)
if not cap.isOpened():
    raise IOError("Cannot open webcam")

while True:
    ret, frame = cap.read()
    if not ret:  ## no frame could be read
        break

    gray = cv2.cvtColor(frame, cv2.COLOR_BGR2GRAY)
    eyes = eye_cascade.detectMultiScale(gray, 1.1, 4)
    eyes_roi = None  ## stays None if no eye is found in this frame
    for x, y, w, h in eyes:
        roi_gray = gray[y:y+h, x:x+w]
        roi_color = frame[y:y+h, x:x+w]
        cv2.rectangle(frame, (x, y), (x+w, y+h), (0, 255, 0), 2)
        eyess = eye_cascade.detectMultiScale(roi_gray)
        if len(eyess) == 0:
            print("eyes are not detected")
        else:
            for (ex, ey, ew, eh) in eyess:
                eyes_roi = roi_color[ey:ey+eh, ex:ex+ew]

    if eyes_roi is None:
        status = "Eyes not detected"
    else:
        final_image = cv2.resize(eyes_roi, (224, 224))
        final_image = np.expand_dims(final_image, axis=0)  ## need fourth dimension
        final_image = final_image / 255.0
        Predictions = new_model.predict(final_image)
        Predictions_id = np.argmax(Predictions)
        status = "Open Eyes" if Predictions_id == 1 else "Closed Eyes"

    faces = faceCascade.detectMultiScale(gray, 1.1, 4)
    # Draw a rectangle around the faces
    for (x, y, w, h) in faces:
        cv2.rectangle(frame, (x, y), (x+w, y+h), (0, 255, 0), 2)

    # Use putText() method for inserting text on video
    font = cv2.FONT_HERSHEY_SIMPLEX
    cv2.putText(frame, status, (50, 50), font, 3, (0, 0, 255), 2, cv2.LINE_4)

    cv2.imshow('Drowsiness Detection Tutorial', frame)
    if cv2.waitKey(2) & 0xFF == ord('q'):
        break

cap.release()
cv2.destroyAllWindows()

If the eyes stay closed for an unusually long time (longer than a blink, for a few seconds), an alarm is generated.

import winsound   ## Windows only
frequency = 2500  # Set frequency to 2500 Hz
duration = 1000   # Set duration to 1000 ms == 1 second

import numpy as np
import cv2  ### pip install opencv-python
## pip install opencv-contrib-python fullpackage

faceCascade = cv2.CascadeClassifier(cv2.data.haarcascades + 'haarcascade_frontalface_default.xml')
eye_cascade = cv2.CascadeClassifier(cv2.data.haarcascades + 'haarcascade_eye_tree_eyeglasses.xml')  ## eye model that also handles glasses

cap = cv2.VideoCapture(1)
# Check if the webcam is opened correctly; fall back to the default camera
if not cap.isOpened():
    cap = cv2.VideoCapture(0)
if not cap.isOpened():
    raise IOError("Cannot open webcam")

counter = 0  ## consecutive closed-eye predictions
font = cv2.FONT_HERSHEY_SIMPLEX
x1, y1, w1, h1 = 0, 0, 175, 75  ## black status box in the top-left corner

while True:
    ret, frame = cap.read()
    if not ret:
        break

    gray = cv2.cvtColor(frame, cv2.COLOR_BGR2GRAY)
    eyes = eye_cascade.detectMultiScale(gray, 1.1, 4)
    eyes_roi = None
    for x, y, w, h in eyes:
        roi_gray = gray[y:y+h, x:x+w]
        roi_color = frame[y:y+h, x:x+w]
        cv2.rectangle(frame, (x, y), (x+w, y+h), (0, 255, 0), 2)
        eyess = eye_cascade.detectMultiScale(roi_gray)
        if len(eyess) == 0:
            print("eyes are not detected")
        else:
            for (ex, ey, ew, eh) in eyess:
                eyes_roi = roi_color[ey:ey+eh, ex:ex+ew]

    faces = faceCascade.detectMultiScale(gray, 1.1, 4)
    # Draw a rectangle around the faces
    for (x, y, w, h) in faces:
        cv2.rectangle(frame, (x, y), (x+w, y+h), (0, 255, 0), 2)

    if eyes_roi is not None:
        final_image = cv2.resize(eyes_roi, (224, 224))
        final_image = np.expand_dims(final_image, axis=0)  ## need fourth dimension
        final_image = final_image / 255.0
        Predictions = new_model.predict(final_image)
        Predictions_id = np.argmax(Predictions)

        if Predictions_id == 1:
            counter = 0  ## eyes open: restart the count of consecutive closed frames
            status = "Open Eyes"
            cv2.putText(frame, status, (150, 150), font, 3, (0, 255, 0), 2, cv2.LINE_4)
            # Draw black background rectangle, then add text
            cv2.rectangle(frame, (x1, y1), (x1 + w1, y1 + h1), (0, 0, 0), -1)
            cv2.putText(frame, 'Active', (x1 + int(w1/10), y1 + int(h1/2)), cv2.FONT_HERSHEY_SIMPLEX, 0.7, (0, 255, 0), 2)
        else:
            counter = counter + 1
            status = "Closed Eyes"
            cv2.putText(frame, status, (150, 150), font, 3, (0, 0, 255), 2, cv2.LINE_4)
            cv2.rectangle(frame, (x, y), (x+w, y+h), (0, 0, 255), 2)
            if counter > 5:  ## more than 5 closed-eye predictions in a row
                # Draw black background rectangle, then add text
                cv2.rectangle(frame, (x1, y1), (x1 + w1, y1 + h1), (0, 0, 0), -1)
                cv2.putText(frame, 'Sleep Alert !!', (x1 + int(w1/10), y1 + int(h1/2)), cv2.FONT_HERSHEY_SIMPLEX, 0.7, (0, 0, 255), 2)
                winsound.Beep(frequency, duration)  ## blocks for `duration` ms
                counter = 0

    cv2.imshow('Drowsiness Detection Tutorial', frame)
    if cv2.waitKey(2) & 0xFF == ord('q'):
        break

cap.release()
cv2.destroyAllWindows()
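The alarm rule (eyes closed for longer than a blink, for a few seconds) amounts to counting closed-eye frames that follow one another with no open-eye frame in between. Below is a minimal sketch of that rule, separated from OpenCV so it can be tested on its own. `ClosedEyeAlarm` and `frames_for` are hypothetical names, and the threshold of 5 matches the loop above. The frame rate in the example is an assumption; the real loop's rate depends on the camera and on how long `new_model.predict` takes per frame.

```python
class ClosedEyeAlarm:
    """Signal an alarm once the eyes have been closed for more than `threshold`
    consecutive frames. Any open-eye frame resets the count, so ordinary
    blinks spread over time do not add up to an alarm."""

    def __init__(self, threshold: int):
        self.threshold = threshold
        self.closed_frames = 0

    def update(self, eyes_closed: bool) -> bool:
        """Feed one frame's prediction; return True when the alarm should fire."""
        if not eyes_closed:
            self.closed_frames = 0
            return False
        self.closed_frames += 1
        if self.closed_frames > self.threshold:
            self.closed_frames = 0  # start counting again after an alarm
            return True
        return False


def frames_for(seconds: float, fps: float) -> int:
    """Hypothetical helper: how many frames 'closed for `seconds`' spans at `fps`."""
    return max(1, round(seconds * fps))


alarm = ClosedEyeAlarm(threshold=5)
# Made-up sequence: two short blinks, then the eyes stay closed for six frames.
frames = [True, True, False, True, False] + [True] * 6
fired = [alarm.update(closed) for closed in frames]
print(fired.index(True))  # 10: only the sixth consecutive closed frame fires
print(frames_for(2, 15))  # 30 frames for 2 seconds at an assumed 15 fps
```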