Face Emotion Recognition

import cv2  ### pip install opencv-python

## pip install opencv-contrib-python (full package) This code install …

import matplotlib.pyplot as plt  ## pip install matplotlib

from deepface import DeepFace  ## pip install deepface

img = cv2.imread('happyboy.jpg')

# OpenCV loads images in BGR channel order, so plotting the array directly shows swapped colors
plt.imshow(img)

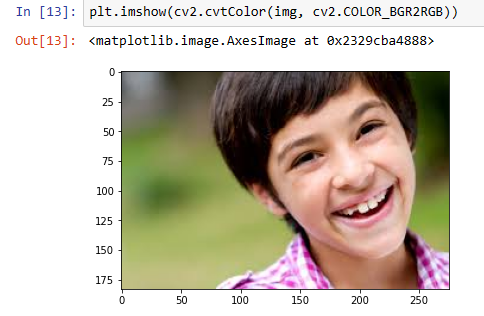

plt.imshow(cv2.cvtColor(img, cv2.COLOR_BGR2RGB))

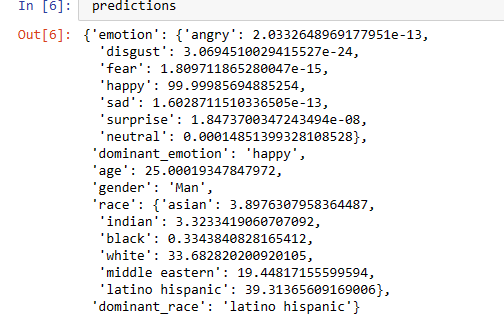

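predictions = DeepFace.analyze(img)

predictions = DeepFace.analyze(img, actions=['emotion'])

# Newer DeepFace releases return a list with one dict per detected face; older ones return a single dict
if isinstance(predictions, list):
    predictions = predictions[0]

predictions['dominant_emotion']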

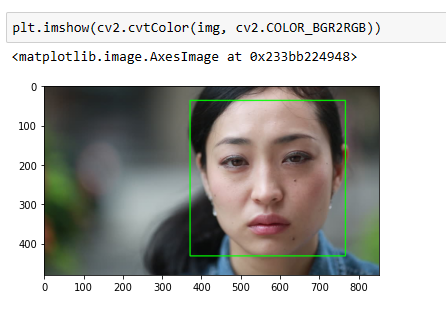

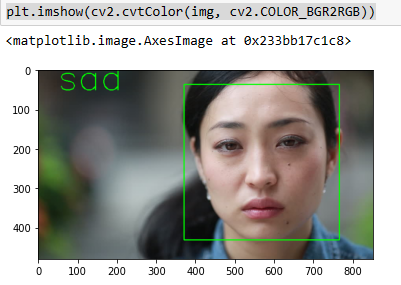
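Besides `dominant_emotion`, the analysis result carries an `emotion` entry that maps each label to a score. The sketch below ranks those scores so the runner-up emotions are visible too. `top_emotions` is a hypothetical helper, and the sample scores are made up for illustration; in the notebook you would pass `predictions['emotion']` instead.

```python
def top_emotions(scores, k=3):
    """Return the k highest-scoring (label, score) pairs, best first."""
    return sorted(scores.items(), key=lambda item: item[1], reverse=True)[:k]


# Made-up scores for illustration only; a real run would use predictions['emotion']
sample_scores = {
    "angry": 0.5, "disgust": 0.0, "fear": 0.3, "happy": 96.2,
    "sad": 0.8, "surprise": 0.7, "neutral": 1.5,
}

for label, score in top_emotions(sample_scores):
    print(f"{label}: {score:.1f}")
```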

img = cv2.imread('sad_women.jpg')

plt.imshow(cv2.cvtColor(img, cv2.COLOR_BGR2RGB))

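predictions = DeepFace.analyze(img)
predictions = predictions[0] if isinstance(predictions, list) else predictions  # newer DeepFace returns a list, one dict per face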

# Load the frontal-face Haar cascade that ships with OpenCV
faceCascade = cv2.CascadeClassifier(cv2.data.haarcascades + 'haarcascade_frontalface_default.xml')

# The cascade works on grayscale images
gray = cv2.cvtColor(img, cv2.COLOR_BGR2GRAY)

#print(faceCascade.empty())

# Arguments: image, scaleFactor=1.1, minNeighbors=4
faces = faceCascade.detectMultiScale(gray, 1.1, 4)

# Draw a rectangle around the faces
for (x, y, w, h) in faces:
    cv2.rectangle(img, (x, y), (x+w, y+h), (0, 255, 0), 2)


plt.imshow(cv2.cvtColor(img, cv2.COLOR_BGR2RGB))
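`detectMultiScale` can return several boxes, including small false positives. A minimal sketch of keeping only the largest box is shown below; `largest_face` is a hypothetical helper, and the boxes in the usage lines are invented values in the same `(x, y, w, h)` layout that the cascade returns.

```python
def largest_face(faces):
    """Return the (x, y, w, h) box with the largest area, or None if there are no boxes."""
    if len(faces) == 0:
        return None
    best = max(faces, key=lambda box: box[2] * box[3])
    # Convert to plain ints so the result works the same for NumPy rows and tuples
    return tuple(int(v) for v in best)


# Invented boxes for illustration; in the notebook, pass the `faces` array instead
example_boxes = [(10, 10, 40, 40), (100, 50, 120, 120), (300, 20, 30, 30)]
print(largest_face(example_boxes))
print(largest_face([]))
```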

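font = cv2.FONT_HERSHEY_SIMPLEX

# Use putText() to draw the predicted emotion on the image
cv2.putText(img,
            predictions['dominant_emotion'],
            (50, 50),      # bottom-left corner of the text
            font, 3,       # font face and scale
            (0, 255, 0),   # color in BGR order (green)
            2,             # line thickness
            cv2.LINE_4);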

plt.imshow(cv2.cvtColor(img, cv2.COLOR_BGR2RGB))

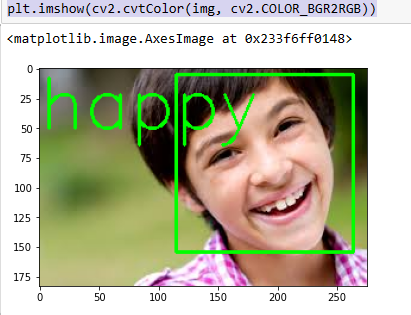
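The label above is drawn at a fixed position, so it can land far from the face or on top of it. One possible alternative, sketched here under assumptions, is to derive the text origin from a detected face box. `label_origin`, `margin`, and `min_y` are hypothetical names, and the default values are arbitrary choices rather than anything taken from OpenCV.

```python
def label_origin(box, margin=10, min_y=30):
    """Return an (x, y) text origin just above a face box given as (x, y, w, h).

    The y value is clamped to min_y so the text stays inside the image
    when the face touches the top edge.
    """
    x, y, w, h = box
    return (int(x), max(int(y) - margin, min_y))


print(label_origin((120, 80, 200, 200)))  # face well below the top edge
print(label_origin((5, 12, 50, 50)))      # face near the top edge, y is clamped
```

The returned tuple can be passed as the third argument of `cv2.putText` in place of the fixed `(50, 50)`.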

img = cv2.imread('happyboy.jpg')

predictions = DeepFace.analyze(img, actions=['emotion'])
predictions = predictions[0] if isinstance(predictions, list) else predictions  # newer DeepFace returns a list, one dict per face

gray = cv2.cvtColor(img, cv2.COLOR_BGR2GRAY)

#print(faceCascade.empty())

faces = faceCascade.detectMultiScale(gray, 1.1, 4)

# Draw a rectangle around the faces
for (x, y, w, h) in faces:
    cv2.rectangle(img, (x, y), (x+w, y+h), (0, 255, 0), 2)

font = cv2.FONT_HERSHEY_SIMPLEX

# Use putText() to draw the predicted emotion on the image
cv2.putText(img,
            predictions['dominant_emotion'],
            (0, 50),
            font, 2,
            (0, 255, 0),
            2,
            cv2.LINE_4);

plt.imshow(cv2.cvtColor(img, cv2.COLOR_BGR2RGB))

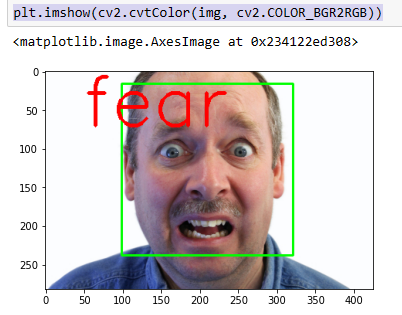

img = cv2.imread('feared_man.jpg')

predictions = DeepFace.analyze(img, actions=['emotion'])
predictions = predictions[0] if isinstance(predictions, list) else predictions  # newer DeepFace returns a list, one dict per face

gray = cv2.cvtColor(img, cv2.COLOR_BGR2GRAY)

#print(faceCascade.empty())

faces = faceCascade.detectMultiScale(gray, 1.1, 4)

# Draw a rectangle around the faces
for (x, y, w, h) in faces:
    cv2.rectangle(img, (x, y), (x+w, y+h), (0, 255, 0), 2)

font = cv2.FONT_HERSHEY_SIMPLEX

# Use putText() to draw the predicted emotion on the image
cv2.putText(img,
            predictions['dominant_emotion'],
            (50, 70),
            font, 3,
            (0, 0, 255),   # color in BGR order (red)
            4,
            cv2.LINE_4);

plt.imshow(cv2.cvtColor(img, cv2.COLOR_BGR2RGB))

Code for Live Video Demo

import cv2 ### pip install opencv-python

## pip install opencv-contrib-python fullpackage

from deepface import DeepFace ## pip install deepface

path = "haarcascade_frontalface_default.xml"

faceCascade = cv2.CascadeClassifier(cv2.data.haarcascades + 'haarcascade_frontalface_default.xml')

# Try camera index 1 first, then fall back to the default camera (index 0)
cap = cv2.VideoCapture(1)

# Check if the webcam is opened correctly
if not cap.isOpened():
    cap = cv2.VideoCapture(0)

if not cap.isOpened():
    raise IOError("Cannot open webcam")

while True:
    ret, frame = cap.read()
    if not ret:  # stop if no frame could be read
        break

    result = DeepFace.analyze(frame, actions=['emotion'])
    result = result[0] if isinstance(result, list) else result  # newer DeepFace returns a list, one dict per face

    gray = cv2.cvtColor(frame, cv2.COLOR_BGR2GRAY)

    #print(faceCascade.empty())

    faces = faceCascade.detectMultiScale(gray, 1.1, 4)

    # Draw a rectangle around the faces
    for (x, y, w, h) in faces:
        cv2.rectangle(frame, (x, y), (x+w, y+h), (0, 255, 0), 2)

    font = cv2.FONT_HERSHEY_SIMPLEX

    # Use putText() to draw the predicted emotion on the video frame
    cv2.putText(frame,
                result['dominant_emotion'],
                (50, 50),
                font, 3,
                (0, 0, 255),
                2,
                cv2.LINE_4)

    cv2.imshow('Original video', frame)

    # Press 'q' to quit
    if cv2.waitKey(2) & 0xFF == ord('q'):
        break

cap.release()

cv2.destroyAllWindows()
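The loop above calls `DeepFace.analyze` on every frame, which is slow, and to my understanding the call raises a `ValueError` by default when no face is found in a frame. A minimal sketch of one way to soften both problems is to analyze only every Nth frame and keep the last successful label. `EmotionTracker`, `every_n`, and `fake_analyze` are hypothetical names; the fake function stands in for a wrapper around `DeepFace.analyze` so the example runs without a camera.

```python
class EmotionTracker:
    """Run an expensive per-frame analysis only every `every_n` frames."""

    def __init__(self, analyze_fn, every_n=10):
        self.analyze_fn = analyze_fn  # callable: frame -> emotion label
        self.every_n = every_n
        self.frame_count = 0
        self.label = None             # last successfully predicted label

    def update(self, frame):
        """Return the current label, re-analyzing on every Nth frame."""
        if self.frame_count % self.every_n == 0:
            try:
                self.label = self.analyze_fn(frame)
            except ValueError:
                pass  # analysis failed (e.g. no face found): keep the previous label
        self.frame_count += 1
        return self.label


# Stand-in for the real analysis; integers play the role of frames here
calls = []

def fake_analyze(frame):
    calls.append(frame)
    if frame == 20:
        raise ValueError("no face in this frame")
    return f"emotion-{frame}"

tracker = EmotionTracker(fake_analyze, every_n=10)
labels = [tracker.update(i) for i in range(25)]
print(calls)
print(labels[0], labels[10], labels[24])
```

In the live loop, `tracker.update(frame)` would replace the direct `DeepFace.analyze` call, and `putText` would be skipped while the returned label is still `None`.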